client/audience

AImpower

timeframe

Aug 2023 - Dec 2023

role

UX Designer

Project Manager

Predicting the Quality of Wine

a study that evaluates the performance of various regression models on a wine-quality dataset using test mean squared error

Introduction & Description of Dataset

The goal of this project was to predict the quality of Portuguese "Vinho Verde" wines from a list of different attributes about the wine described in the table to the right. Our datasets originate from UC Irvine’s Machine Learning Repository. The dataset we used, entitled wine-quality-white-and-red.csv, combines two separate red and white wine datasets from this repository. The datasets also included a “type” variable and a “quality” variable. Type is either red or white depending on the wine, and quality is an integer value rating. The classes are ordered and not balanced, and there is no data regarding grape types, wine brand, pricing, etc. due to privacy reasons.

The “Quality” variable is discrete: it consists exclusively of integers ranging from 3 to 9. In theory, we can approach predicting this variable as a regression problem or as a classification problem. We decided that it would be best to approach it as a regression problem. Although the variable is not continuous, we believe that wines with similar quality ratings (e.g. 6 and 7, or 3 and 4) would have similar attributes. Consequently, we don’t think that adjacent ratings differ enough intrinsically (e.g. a wine rated 8 wouldn’t be extremely different from a wine rated 7) to justify using a classification model.

Data Preprocessing

In terms of preprocessing, we loaded the original CSV set, then split it into two separate data frames according to whether the type of wine was red or white. After filtering based on type, we removed the type column from dfRed and dfWhite as all data in the two respective sets are either all red or all white.
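The preprocessing step can be sketched as follows. The original analysis was done in R, so this Python version is only an illustration; the column subset and the three sample rows are invented for the example, and `split_by_type` is a hypothetical helper name.

```python
import csv
import io

# Toy stand-in for wine-quality-white-and-red.csv. The column names follow
# the write-up, but these three rows are invented for illustration.
SAMPLE_CSV = """type,alcohol,pH,quality
red,9.4,3.51,5
white,8.8,3.00,6
white,10.1,3.26,6
"""

def split_by_type(rows):
    """Split rows into red and white sets and drop the now-constant type column."""
    red, white = [], []
    for row in rows:
        wine_type = row["type"]
        rest = {k: v for k, v in row.items() if k != "type"}
        if wine_type == "red":
            red.append(rest)
        elif wine_type == "white":
            white.append(rest)
    return red, white

rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
df_red, df_white = split_by_type(rows)
print(len(df_red), len(df_white))
```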

The dataset that was provided contained red and white wines together. There were significantly more whites (4898) than reds (1599). We deliberated on whether to analyze the two wine types together or separately. Looking at the distributions of the variables for the two wine types, we can see that they vary significantly, especially sulfur dioxide, density, acidity and chlorides. Since reds and whites differ so much in their characteristics, analyzing them together would likely skew the results, so we decided to analyze them separately. The distribution of quality ratings (the variable we are predicting) did not differ significantly between red and white, which further influenced our decision. Logically speaking, whether a wine is red or white shouldn’t factor into the overall quality of the wine, and we could expect that wine critics would rate reds and whites differently anyway.

Distribution of Quality Variable

Before performing the train/test split, an analysis of the quality variable was conducted to understand the distribution of wine qualities within our dataset. The quality variable ranged from 3 to 9 (inclusive), encompassing integer values representing different quality ratings.

The table to the right illustrates the distribution of wine qualities:

From this analysis, it was evident that wines classified with a quality rating of 5, 6 or 7 were significantly more prevalent within the dataset.

| Quality Rating | Number of Wines |
| --- | --- |
| 3 | 30 |
| 4 | 216 |
| 5 | 2138 |
| 6 | 2836 |
| 7 | 1079 |
| 8 | 193 |
| 9 | 5 |
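As a quick check on how concentrated the ratings are, the counts from the distribution table can be summed directly. This is a small Python sketch for illustration (the original analysis used R); the variable names are ours.

```python
# Counts per quality rating, copied from the distribution table in this section.
quality_counts = {3: 30, 4: 216, 5: 2138, 6: 2836, 7: 1079, 8: 193, 9: 5}

total = sum(quality_counts.values())
middle = sum(quality_counts[q] for q in (5, 6, 7))

print(total)                     # number of wines in the combined dataset
print(round(middle / total, 3))  # share of wines rated 5, 6 or 7
```

The total matches the 4898 white and 1599 red wines reported earlier, and roughly 93% of all wines fall in the three middle ratings.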

Train/Test Split Procedure

Train/Test Split Methodology

Initially, the team considered utilizing the sample function to randomly split the dataset into training and testing sets. However, to ensure an equitable representation of specified categories within the quality column in both subsets, a decision was made to employ stratified sampling.

For this purpose, the createDataPartition function from the caret package was utilized. This function enabled us to create a stratified split that maintained the proportional representation of different quality ratings within the dataset across both the training and testing sets. This approach ensured that the distribution observed in the original dataset was preserved in both subsets, allowing for a more reliable evaluation of model performance.
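The idea of a stratified split can be sketched in plain Python. This is not a reimplementation of createDataPartition (which may group a numeric response differently); it simply samples each rating separately. The 80/20 split fraction, the seed, and the toy labels are assumptions made for the example.

```python
import random
from collections import defaultdict

def stratified_split(labels, train_fraction=0.8, seed=0):
    """Return (train_idx, test_idx), sampling each label group separately."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for i, label in enumerate(labels):
        by_label[label].append(i)
    train_idx, test_idx = [], []
    for label in sorted(by_label):
        idx = by_label[label]
        rng.shuffle(idx)
        cut = round(train_fraction * len(idx))
        train_idx.extend(idx[:cut])
        test_idx.extend(idx[cut:])
    return sorted(train_idx), sorted(test_idx)

# Toy labels (invented): an imbalanced mix of three quality ratings.
labels = [5] * 50 + [6] * 40 + [7] * 10
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))
```

Because each rating is split on its own, the rare rating (7 in this toy example) keeps the same share in both subsets, which a plain random split does not guarantee.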

Multiple Linear Regression

Simple linear regression predicts a response from a single predictor. The model assumes an approximately linear relationship between X and Y: Y ≈ β₀ + β₁X. The coefficients β₀ and β₁ are unknown and are estimated by minimizing the residual sum of squares (least squares). Multiple linear regression extends the same approach to several predictors, with one additional coefficient per predictor. For the wine dataset, multiple linear regression was used to predict quality (Y) using all the remaining variables in the dataset as predictors (fixed.acidity, volatile.acidity, citric.acid, residual.sugar, chlorides, free.sulfur.dioxide, total.sulfur.dioxide, density, pH, sulphates, and alcohol). Two multiple linear regression models were created, lm.red and lm.white.

Linear regression is easy to understand and interpret within the context of the problem. It is also flexible and adapts readily to different data. However, it has major disadvantages: it can overfit or underfit depending on the data, it assumes linearity, which may not generalize to the real world, and it is sensitive to outliers and noise in the data.
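In symbols, with p predictors (p = 11 for the wine data), the model and the least-squares criterion take the standard textbook form below. The index notation (i for wines, j for predictors) is added here for illustration.

```latex
Y \approx \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_p X_p,
\qquad
\mathrm{RSS} = \sum_{i=1}^{n} \Bigl( y_i - \hat\beta_0 - \sum_{j=1}^{p} \hat\beta_j x_{ij} \Bigr)^2
```

The coefficient estimates are the values that make RSS as small as possible on the training data.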

The red wine model resulted in a residual standard error of 0.648. The low R squared indicates that the model leaves a substantial amount of variability in the response unexplained. Encouragingly, the significant F-statistic and low p-value indicate that the model does have some explanatory power. The test MSE is 0.3953678.
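For reference, the test MSE reported here is the average squared difference between observed and predicted quality on the held-out set. A minimal Python sketch with a single predictor follows; the numbers are invented, and the original models were fit in R with all 11 predictors.

```python
def fit_simple_ols(x, y):
    """Least-squares estimates (beta0, beta1) for y ~ beta0 + beta1 * x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    beta1 = sxy / sxx
    beta0 = y_bar - beta1 * x_bar
    return beta0, beta1

def mse(y_true, y_pred):
    """Mean squared error between observed and predicted values."""
    return sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)

# Invented numbers: one predictor (say, alcohol) and a quality score.
x_train, y_train = [9.0, 10.0, 11.0, 12.0], [5.0, 5.0, 6.0, 7.0]
x_test, y_test = [9.5, 11.5], [5.0, 6.0]

beta0, beta1 = fit_simple_ols(x_train, y_train)
test_mse = mse(y_test, [beta0 + beta1 * x for x in x_test])
print(beta0, beta1, test_mse)
```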

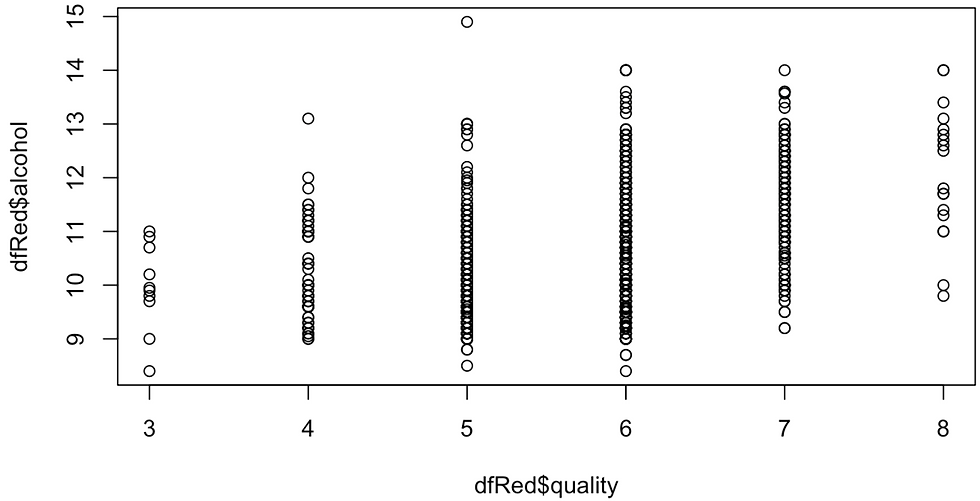

Exploratory analysis of the data revealed that there are highly rated wines with both high and low alcohol content. Most variables had a similar distribution.

The white wine model resulted in a residual standard error of 0.7514. As with the red wine model, linear regression leaves plenty to be desired in terms of prediction. Again, the R squared is relatively low, but the low p-value of the F-statistic indicates that the model as a whole is statistically significant. The test MSE is 0.5847695.

When exploring the data, it is interesting to note that there aren’t any clear correlations between variables (similar results for red wines). This makes sense as the variables are all relatively distinct.

Ridge Regression and Lasso

Beyond MLR, we wanted to see whether the data would fit well with ridge and lasso regression models. The penalty controlled by λ should shrink any coefficients that are overemphasized by MLR. Furthermore, our data contains 11 predictors, all of them different physicochemical measurements of the wine. It’s difficult to say whether every one of these has a significant effect on wine quality in isolation, so lasso regression should remove any predictors that do not have a significant effect on wine critics’ ratings.
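For reference, the two penalized criteria in their usual textbook form are shown below, where RSS(β) is the residual sum of squares and the intercept is not penalized. Note that glmnet minimizes a rescaled version of these objectives, so its λ values are not directly comparable with this form.

```latex
\hat\beta^{\text{ridge}} = \arg\min_{\beta} \; \mathrm{RSS}(\beta) + \lambda \sum_{j=1}^{p} \beta_j^2,
\qquad
\hat\beta^{\text{lasso}} = \arg\min_{\beta} \; \mathrm{RSS}(\beta) + \lambda \sum_{j=1}^{p} |\beta_j|
```

The squared penalty shrinks coefficients toward zero without removing them, while the absolute-value penalty can set some coefficients exactly to zero, which is why lasso also acts as a variable selector.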

We utilized cross validation (cv.glmnet) to find the ideal lambda values for each of the datasets and regression methods, which can be seen here on the left.
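The idea behind choosing λ by cross-validation can be sketched as follows. This is not what cv.glmnet does internally: it is a pure-Python illustration with one predictor, invented data, a hand-picked λ grid, a simple fold assignment, and the textbook ridge penalty (glmnet scales its objective differently, so λ values are not comparable).

```python
def ridge_fit_1d(x, y, lam):
    """One-predictor ridge: minimise RSS + lam * beta1**2 (intercept unpenalised)."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxy = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
    sxx = sum((a - x_bar) ** 2 for a in x)
    beta1 = sxy / (sxx + lam)
    return y_bar - beta1 * x_bar, beta1

def cv_mse(x, y, lam, k=5):
    """Average held-out MSE over k folds (fold f holds every k-th point)."""
    fold_errors = []
    for fold in range(k):
        test = [i for i in range(len(x)) if i % k == fold]
        train = [i for i in range(len(x)) if i % k != fold]
        b0, b1 = ridge_fit_1d([x[i] for i in train], [y[i] for i in train], lam)
        errs = [(y[i] - (b0 + b1 * x[i])) ** 2 for i in test]
        fold_errors.append(sum(errs) / len(errs))
    return sum(fold_errors) / k

# Invented data: y is roughly 0.5 * x plus a fixed wiggle.
x = [float(i) for i in range(20)]
y = [0.5 * xi + (0.3 if i % 2 else -0.3) for i, xi in enumerate(x)]

# Pick the candidate lambda with the lowest cross-validated MSE.
lambdas = [0.0, 0.1, 1.0, 10.0, 100.0]
best_lambda = min(lambdas, key=lambda lam: cv_mse(x, y, lam))
print(best_lambda)
```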

We then fit each model to the training data and obtained the MSEs and coefficients that can be seen on the right. (Note that the glmnet package automatically standardizes the predictors before fitting and converts the coefficients back to their original units afterwards.)

Interestingly, the test MSEs for red wine are significantly lower than those for white wine. We’re not completely sure why this is the case, but it could be due to the fact that there are more datapoints for white wine compared to red wine, leading to more noise in the data and making it more difficult to fit. It could also be the case that white wine has more variability in quality compared to red wines in general, making it more difficult to predict.

Ridge has a slightly (though not significantly) lower test MSE than lasso for both wine types, pointing to the idea that all of the factors have some influence on the quality and should be taken into account.

For ridge regression, certain predictors have very similar coefficients between red and white wine, like alcohol or free sulfur dioxide. Both types have a very strong negative relationship with density, but white wine’s relationship is significantly larger. This would point to critics preferring higher alcohol content, in addition to preferring an overall “lighter” wine. On the other hand, pH has a positive relationship in white wines, whereas it’s negative for reds. This would point to acidity being preferred in red wines (since acidity decreases as pH decreases) compared to white wines, which are known for being sweeter or lighter. This is also supported by the coefficient for residual sugar, which is ten times larger for whites compared to reds, pointing to a higher preference for sweetness in white wine. Curiously, citric acid has a slight positive relationship with white wine quality compared to red wines; perhaps critics prefer sourness in whites compared to reds.

It’s also interesting to see the difference in predictor prioritization in the lasso regression for both wine types. They differ significantly, and the only shared predictors they have are volatile acidity (acetic acid content), chlorides, sulphates, and alcohol. Both models also chose to remove citric acid and density. This is particularly curious, since density has such a high coefficient in the ridge model.

Ultimately, the MSEs for Ridge and Lasso are very similar to MLR, so we wanted to explore other models to fit the data on and find more accurate predictions.

Regression Tree

Decision trees can be applied to both regression and classification problems; we used a regression tree. The model first divides the predictor space into distinct, non-overlapping regions through recursive binary splitting, where each split creates two new branches further down the tree. The prediction for an observation is the mean response of the training observations in the region it falls into. At each step, the goal is to find the predictor and cutpoint that minimize the residual sum of squares (RSS). This iterative process continues until a stopping criterion, such as a minimum number of observations per region, is met.
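A single step of recursive binary splitting can be sketched in a few lines of Python. This is an illustration for one predictor only (a real tree searches every predictor at every step), the data are invented, and the function names are ours rather than anything from the R tree-fitting code.

```python
def rss(values):
    """Residual sum of squares around the mean of values."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)

def best_split(x, y):
    """Return (cutpoint, total_rss) for the split x < cutpoint with lowest RSS."""
    xs = sorted(set(x))
    best = (None, float("inf"))
    # Candidate cutpoints are midpoints between consecutive distinct x values.
    for lo, hi in zip(xs, xs[1:]):
        cut = (lo + hi) / 2
        left = [yi for xi, yi in zip(x, y) if xi < cut]
        right = [yi for xi, yi in zip(x, y) if xi >= cut]
        total = rss(left) + rss(right)
        if total < best[1]:
            best = (cut, total)
    return best

# Invented example: quality jumps once alcohol passes about 10.5.
alcohol = [9.0, 9.5, 10.0, 11.0, 11.5, 12.0]
quality = [5.0, 5.0, 5.0, 7.0, 7.0, 7.0]
cut, total = best_split(alcohol, quality)
print(cut, total)
```

Applying the same search again inside each of the two resulting regions gives the recursive procedure described above.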

One benefit of regression trees is that they are easy to interpret and visualize, offering a quick way to identify relationships between variables and the most significant variable. Additionally, decision trees demand less effort in data preprocessing than other algorithms, since they handle categorical and numerical data without requiring normalization. One potential drawback is that trees are non-robust: a small change in the data can cause a large change in the final estimated tree. Overfitting is another challenge, although pruning is an effective way to mitigate it. Pruning involves finding an optimal tuning parameter and tree size, which can be selected using cross-validation because it helps identify the best trade-off between model complexity and performance.
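The write-up does not name the pruning criterion. Assuming standard cost-complexity pruning, the quantity minimized for each value of the tuning parameter α is shown below, where |T| is the number of terminal nodes of a subtree T, R_m is the region for the m-th terminal node, and ŷ_{R_m} is the mean training response in that region.

```latex
\sum_{m=1}^{|T|} \; \sum_{i:\, x_i \in R_m} \bigl( y_i - \hat{y}_{R_m} \bigr)^2 + \alpha \, |T|
```

Larger values of α penalize tree size more heavily and therefore select smaller trees; cross-validation is used to choose α.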

Because the tree-fitting procedure applies its own hyperparameter settings and automatically selects the features that best fit the training data, only some of the predictors appear in each tree. For red wine, the features used are alcohol, sulphates, volatile acidity, and total sulfur dioxide. For white wine, the features used are alcohol, volatile acidity, and free sulfur dioxide.

| Wine type | Model | λ | Test MSE |
| --- | --- | --- | --- |
| White Wine | Ridge | 0.0390 | 0.5593 |
| Red Wine | Ridge | 0.0385 | 0.3958 |
| White Wine | Lasso | 0.0049 | 0.5599 |
| Red Wine | Lasso | 0.0112 | 0.3993 |

Test MSE

After Pruning

Before Pruning

White Wine

Red Wine

0.579197

0.437901

Bootstrap Aggregation (Bagging)

The bootstrap aggregation approach (bagging) involves generating B different bootstrapped training datasets by repeatedly sampling from the single training dataset. B regression trees are then constructed, one on each bootstrapped training set. These trees are grown without any pruning, so each has low bias but high variance; bagging mitigates the high variance by averaging the predictions of all B trees. However, it is important to note that bagging makes the model harder to interpret and is computationally expensive when the dataset and the number of predictors are large.

Because bagging is a special case of a random forest with m = p, where the number of variables sampled per split equals the total number of predictor variables, we used the randomForest() function to perform both random forests and bagging.
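The bootstrap-and-average mechanism can be sketched in plain Python. To keep the example short, each base learner here is a one-split tree on a single predictor rather than the deep unpruned trees described above, and the data, the number of trees, and the seed are invented; this is not what randomForest() runs internally.

```python
import random

def fit_stump(x, y):
    """Fit a one-split tree on one predictor; return a prediction function."""
    best = None
    xs = sorted(set(x))
    for lo, hi in zip(xs, xs[1:]):
        cut = (lo + hi) / 2
        left = [b for a, b in zip(x, y) if a < cut]
        right = [b for a, b in zip(x, y) if a >= cut]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        err = sum((b - lm) ** 2 for b in left) + sum((b - rm) ** 2 for b in right)
        if best is None or err < best[0]:
            best = (err, cut, lm, rm)
    if best is None:  # bootstrap sample had a single distinct x value
        mean = sum(y) / len(y)
        return lambda value: mean
    _, cut, lm, rm = best
    return lambda value: lm if value < cut else rm

def bagged_predict(x, y, x_new, n_trees=50, seed=0):
    """Average the predictions of n_trees stumps fit to bootstrap samples."""
    rng = random.Random(seed)
    n = len(x)
    preds = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        stump = fit_stump([x[i] for i in idx], [y[i] for i in idx])
        preds.append(stump(x_new))
    return sum(preds) / n_trees

# Invented data: one predictor and a quality score.
x = [9.0, 9.5, 10.0, 11.0, 11.5, 12.0]
y = [5.0, 5.0, 6.0, 6.0, 7.0, 7.0]
prediction = bagged_predict(x, y, x_new=10.5)
print(prediction)
```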

Red Wine

Test MSE: 0.293348

White Wine

Test MSE: 0.367957

Random Forests

Random forests improve on bagged trees by introducing a key modification that addresses the issue of correlated trees, which reduces variance when averaging predictions. As in bagging, random forests build multiple decision trees on bootstrapped training samples. However, at each split in a tree, only a random subset of the full set of predictors is considered as split candidates. This randomization means that the algorithm is not allowed to consider a majority of the available predictors at any given split, which decorrelates the trees and gives other predictors more of a chance.
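The only difference from bagging is which predictors each split may consider, which can be sketched as follows. This is an illustrative Python fragment, not the randomForest implementation; `split_candidates` is a hypothetical helper and mtry = 3 is an arbitrary value chosen for the example.

```python
import random

# The 11 predictors named in this write-up.
PREDICTORS = [
    "fixed.acidity", "volatile.acidity", "citric.acid", "residual.sugar",
    "chlorides", "free.sulfur.dioxide", "total.sulfur.dioxide", "density",
    "pH", "sulphates", "alcohol",
]

def split_candidates(predictors, mtry, rng):
    """Pick the mtry predictors that one split is allowed to consider."""
    return rng.sample(predictors, mtry)

rng = random.Random(0)
# Random forest: a fresh random subset at every split (mtry < p).
forest_candidates = split_candidates(PREDICTORS, mtry=3, rng=rng)
# Bagging: mtry equals p, so every predictor is always available.
bagging_candidates = split_candidates(PREDICTORS, mtry=len(PREDICTORS), rng=rng)
print(forest_candidates)
```

When a strong predictor is left out of the candidate set for a split, the tree has to use another variable there, which is what makes the trees less alike.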

A potential benefit of random forests is their ability to capture both linear and non-linear relationships. Additionally, no scaling or transformation of variables is usually necessary. Random forests implicitly perform feature selection and generate decorrelated decision trees, which is good for data with a high number of features. However, they are not as easily interpretable and can be computationally expensive at times.

In the case of our wine data, we once again used the randomForest() function. This time, to optimize the performance of each random forest model, we performed a grid search to determine the optimal value for the mtry parameter, which was 1 for both red and white wine. The models were then assessed by their test MSE. We tested several models, comparing performance with all features against performance with subsets of features. The final results indicated that, for both red wine and white wine, the model performs best when all features are included.

We also measured variable importance using the importance() function on the trained model, which gives insight into the effect of each attribute on overall performance. The two metrics, %IncMSE and IncNodePurity, offer different perspectives on the importance of each variable. %IncMSE is the percent increase in MSE; a high value means that the model's MSE increases substantially, and its predictive accuracy deteriorates, when the variable is excluded, which suggests that the variable is important. IncNodePurity, on the other hand, is useful for understanding the role of variables in the structure of the trees; a high value usually indicates that the variable contributes significantly to creating well-separated nodes. For red wine, the random forest model places the highest importance on volatile acidity, alcohol content, and sulphates when predicting quality. For white wine, the most important variables are alcohol, free sulfur dioxide, and volatile acidity.
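The intuition behind an MSE-based importance score can be sketched by scrambling one variable and measuring how much the error grows. This is a simplified Python illustration and not a reimplementation of importance(), which works on the fitted forest and may scale its output; the `predict` function stands in for a hypothetical fitted model, and the data are invented.

```python
import random

def mse(y_true, y_pred):
    """Mean squared error between observed and predicted values."""
    return sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)

def permutation_importance(predict, rows, y, feature, seed=0):
    """Percent increase in MSE after shuffling one feature's column."""
    rng = random.Random(seed)
    base = mse(y, [predict(r) for r in rows])
    shuffled = [r[feature] for r in rows]
    rng.shuffle(shuffled)
    permuted = [{**r, feature: v} for r, v in zip(rows, shuffled)]
    after = mse(y, [predict(r) for r in permuted])
    return 100.0 * (after - base) / base

# Hypothetical fitted model: depends on alcohol only and ignores pH.
def predict(row):
    return 0.5 * row["alcohol"]

rows = [{"alcohol": a, "pH": p} for a, p in
        [(9.0, 3.2), (10.0, 3.4), (11.0, 3.1), (12.0, 3.3), (13.0, 3.5)]]
y = [4.6, 5.1, 5.4, 6.1, 6.4]

ph_importance = permutation_importance(predict, rows, y, "pH")
alcohol_importance = permutation_importance(predict, rows, y, "alcohol")
print(ph_importance, alcohol_importance)
```

A variable the model never uses (pH in this toy model) scores zero, because scrambling it leaves every prediction unchanged.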

Feature Importance

| Wine type | Test MSE (All Features) | Test MSE (subset 1) | Test MSE (subset 2) | Test MSE (subset 3) |
| --- | --- | --- | --- | --- |
| Red Wine | 0.319368 | 0.336236 | 0.321539 | 0.325735 |
| White Wine | 0.378758 | 0.460660 | 0.421388 | 0.445476 |

Conclusion and Limitations

In this study, we assessed the performance of various regression models on the dataset using test mean squared error (our measure of model efficacy). Multiple Linear Regression, Ridge, and Lasso exhibited moderate predictive capabilities, while the ensemble tree-based models (Bootstrap Aggregation and Random Forest) had lower test MSE. The method with the lowest test MSE was Bootstrap Aggregation.

Despite evaluating a range of models, this study had several limitations. Firstly, the quality ratings (our response variable) were not evenly represented in the dataset, which may have biased model performance. Because our models were trained on an imbalanced dataset, they tend to favor the most common ratings, which leads to biased predictions. Specifically, they may predict rare ratings poorly, reducing the overall accuracy and reliability of the model. Moreover, the dataset might have contained inherent biases or noise (especially in these underrepresented ratings), which would also affect the models' learning capabilities and overall predictive accuracy.

In conclusion, while this study provided insights into the performance of various models on the given dataset, further research involving feature engineering, hyperparameter tuning, and potentially alternative modeling techniques could enhance the predictive capabilities and robustness of the models.

| Model | Test MSE for Red Wine | Test MSE for White Wine |
| --- | --- | --- |
| Multiple Linear Regression | 0.3953 | 0.5847 |
| Ridge | 0.3958 | 0.5593 |
| Lasso | 0.3993 | 0.5599 |
| Regression Tree | 0.4379 | 0.5792 |
| Bootstrap Aggregation | 0.2933 | 0.3680 |
| Random Forest | 0.3194 | 0.3788 |